By Luke Smith · 2/25/2016

Data centers are one of the vital gears that keep our modern technology running. With the number of online services available today, data centers need to stay operational at all times. Our business and personal lives have never been more dependent on data centers operating smoothly. The Ponemon Institute recently released its third report on data center outages, revealing the cost impact of data center downtime. With the high economic costs related to these incidents, understanding and evaluating a data center's critical infrastructure has never been more important.

A data center can go down for a number of reasons: UPS system failure; cyber crime; human error; water, heat, or CRAC failure; weather; generator failure; and IT equipment failure. The most common cause of a data center outage is UPS system failure, accounting for 25% of the outages reported by the sample of 63 data centers polled by the Ponemon Institute. Least common was IT equipment failure, making up only 4% of the outages experienced. Across the three surveys the Ponemon Institute has conducted, the most common causes of outages have followed roughly the same trend: UPS system failure is consistently the most common cause, followed by human error, with IT equipment failure the least common. Although most categories have changed little, the percentage of outages due to cyber crime has increased substantially. In 2010, cyber crime, such as DDoS attacks, accounted for 2% of all data center outages. Today, however, 22% of data center outages are a result of cyber crime.

Regardless of the cause, downtime can cost companies hundreds of thousands, if not millions, of dollars. Beyond direct costs such as repairing or replacing damaged equipment, an outage carries indirect costs: shareholders and investors may lose confidence, and customers may become dissatisfied. The reported cost of an outage ranged from $70,512 to $2,409,991. The average reported cost was $740,357, 7% higher than in 2013 and nearly 50% higher than in 2010.

There are several ways to evaluate the cost impact of data center outages:

- Outage Cause – While IT equipment failure was the least common cause of data center outages, it was the costliest, averaging $995,000 per incident. Cyber crime was a close second, costing an average of $981,000 per incident.

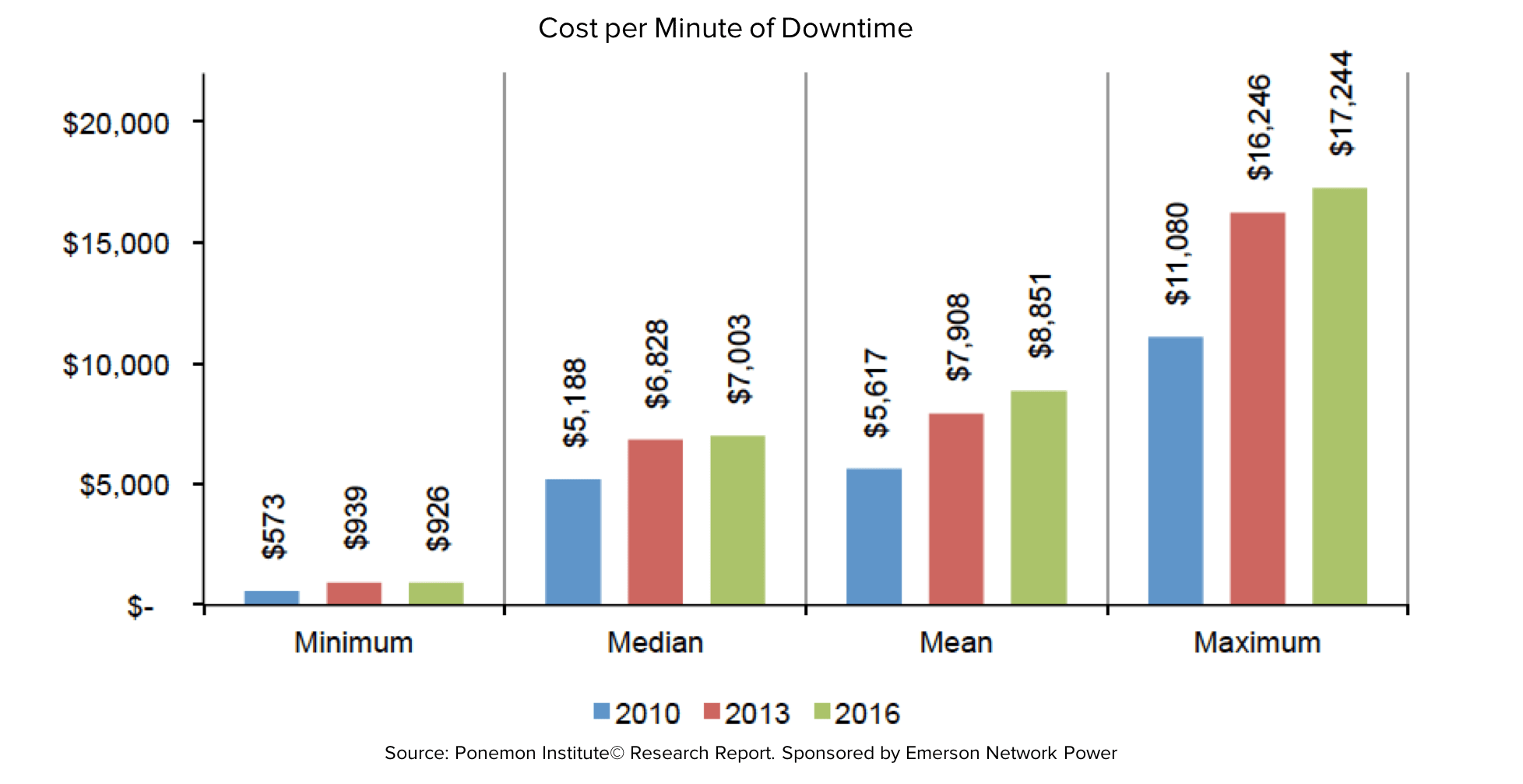

- Outage Duration – The longer your data center is down, the more money you can lose. The average incident cost $8,851 per minute, with the most expensive outage costing $17,244 per minute. With the average outage lasting 95 minutes and the longest lasting 415 minutes, every minute is costly.

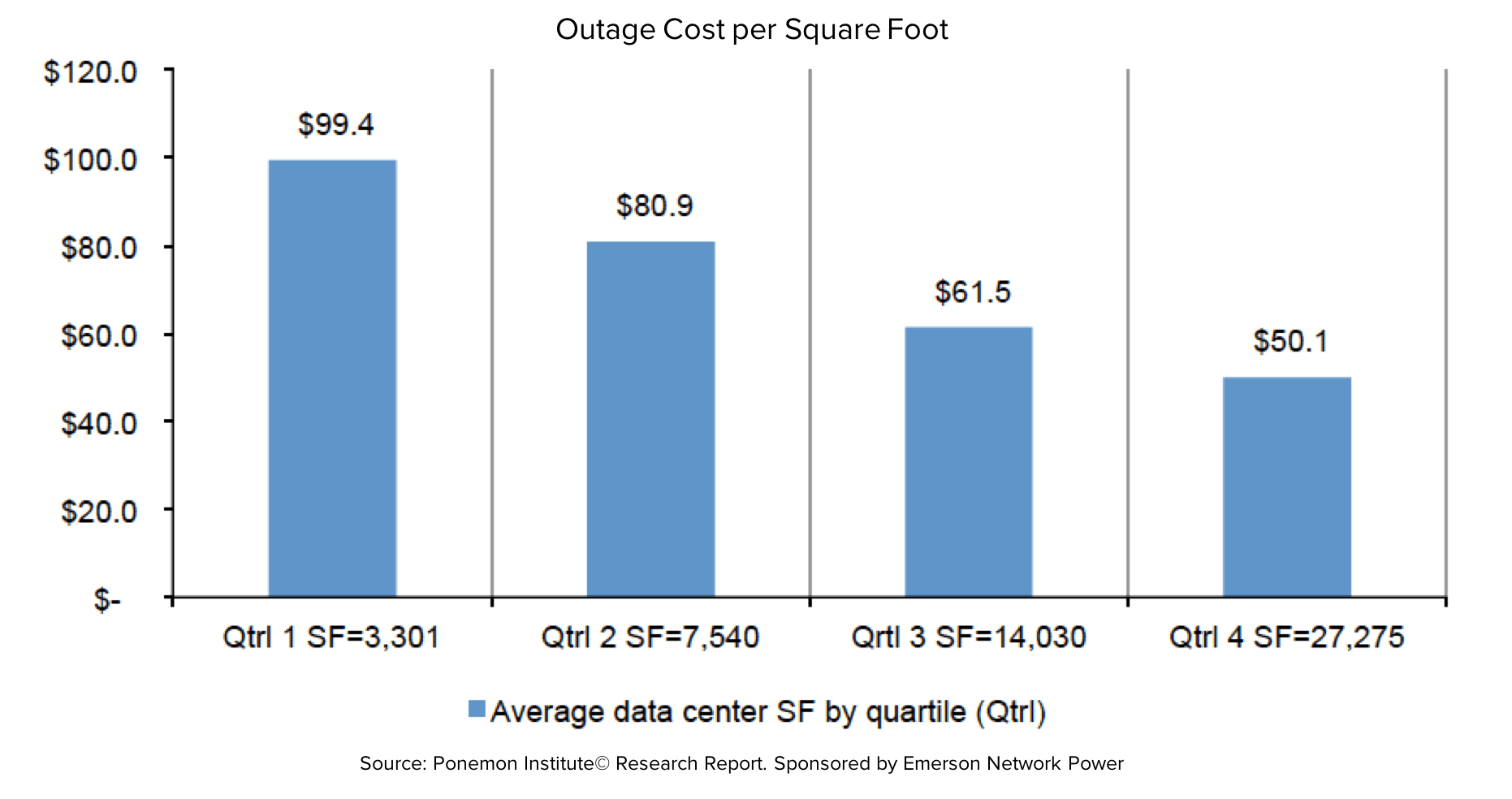

- Data Center Size – Though the relationship is not exact, larger data centers generally lose less per square foot in an outage than smaller ones. The total amount lost, though, is higher for larger data centers.
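
To make the per-minute figures under Outage Duration concrete, here is a minimal Python sketch that multiplies a cost per minute by an outage length. The function name `estimate_outage_cost` and the constants are hypothetical, chosen only for illustration, and the linear model is an assumption rather than the method used in the Ponemon report.

```python
# Figures quoted in the article; the constant names are illustrative.
AVG_COST_PER_MINUTE = 8_851   # USD per minute for the average incident
AVG_OUTAGE_MINUTES = 95       # duration of the average outage, in minutes


def estimate_outage_cost(minutes: int, cost_per_minute: int = AVG_COST_PER_MINUTE) -> int:
    """Hypothetical helper: rough linear estimate of an outage's cost in USD.

    Assumes the cost accrues at a constant rate per minute, which is a
    simplification for illustration only.
    """
    if minutes < 0 or cost_per_minute < 0:
        raise ValueError("minutes and cost_per_minute must be non-negative")
    return minutes * cost_per_minute


if __name__ == "__main__":
    estimate = estimate_outage_cost(AVG_OUTAGE_MINUTES)
    print(f"Estimated cost of an average-length outage: ${estimate:,}")
    # Estimated cost of an average-length outage: $840,845
```

This back-of-the-envelope product comes out higher than the $740,357 average reported cost, presumably because per-minute cost and duration vary from incident to incident, so multiplying two averages gives only a rough estimate.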

As the world becomes more connected, the importance of data center uptime increases. While the causes of data center outages remain mostly the same, the cost per incident has increased substantially since 2010. To reduce the risk of further outages, companies have to ensure they have sufficient critical infrastructure in place to provide the services their business depends on.